Last Updated on September 19, 2025

Keyword research is the backbone of any successful SEO strategy. It helps you understand what your target audience is searching for and how to optimize your content to meet their needs. However, manual keyword research can be time-consuming and may miss relevant terms and phrases. This is where automation comes in: specifically, combining N-Gram analysis, Python, and Natural Language Processing (NLP) for SEO keyword research.

In this post, we will extract primary, secondary, and Latent Semantic Indexing (LSI) keywords from the pages on Google’s first page of results in one go, and then enrich this data with the nuanced understanding that NLP provides. The Python script doesn’t just scrape keywords; it analyzes them. N-Gram analysis lets us identify not only individual keywords but also meaningful phrases that your competitors rank for. We then apply NLP techniques to filter and refine these keywords, keeping the terms most relevant to the search query.

Understanding N-Gram Analysis

Before we dive into the code, let’s understand what N-Grams are. An N-Gram is a contiguous sequence of n items from a given sample of text or speech. In the context of keyword research:

- 1-gram (Unigram): Single words (e.g., “marketing”)

- 2-gram (Bigram): Two-word phrases (e.g., “inbound marketing”)

- 3-gram (Trigram): Three-word phrases (e.g., “digital marketing strategy”)

N-Gram analysis allows us to identify patterns in keyword usage, helping us understand not just individual keywords, but also how they’re commonly combined in phrases.
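To make the definition concrete, here is a minimal sketch that builds n-grams of any size from a list of words. The helper name ngrams is our own choice for this example (NLTK also offers nltk.util.ngrams, which yields tuples rather than strings); the article’s script builds its 1-, 2-, and 3-grams with explicit loops instead.

```python
def ngrams(words, n):
    """Return every contiguous run of n words, joined by spaces."""
    # zip over n staggered copies of the list: words[0:], words[1:], ..., words[n-1:]
    return [" ".join(gram) for gram in zip(*(words[i:] for i in range(n)))]


words = "effective inbound marketing strategy".split()
print(ngrams(words, 1))  # ['effective', 'inbound', 'marketing', 'strategy']
print(ngrams(words, 2))  # ['effective inbound', 'inbound marketing', 'marketing strategy']
print(ngrams(words, 3))  # ['effective inbound marketing', 'inbound marketing strategy']
```

A text of k words yields k - n + 1 n-grams, and none at all when n is larger than k.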

Understanding Natural Language Processing (NLP)

Natural Language Processing, or NLP, is a branch of artificial intelligence that focuses on the interaction between computers and human language. In our automated keyword research, NLP refines the extracted keywords. Rather than relying on simple string matching, it compares the semantic meaning of words and phrases: in the script below, the search query and each keyword are converted into sentence embeddings, and keywords whose meaning is far from the query are filtered out. This lets us keep synonyms and related concepts (e.g., “advertising” or “sales” for the query “inbound marketing”) while discarding terms that merely happen to appear on the ranking pages. More broadly, NLP techniques can help infer search intent, distinguish homonyms (words that are spelled the same but have different meanings), and interpret idiomatic expressions. By incorporating NLP into our keyword research process, we’re not just collecting data; we’re gaining insight into how users actually phrase their searches, which enables more targeted and effective SEO strategies.


Setting Up Your Python Environment

To get started, you’ll need to set up your Python environment with the following libraries:

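- google: for scraping Google search results (it provides the googlesearch module; the similarly named googlesearch-python package exposes a different search() signature)
- trafilatura: for extracting text content from web pages
- nltk: for natural language processing tasks such as stopword removal
- sentence-transformers: for the embedding model used in the NLP step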

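You can install these libraries using pip (the leading ! runs the command from a Colab or Jupyter notebook cell), then download NLTK’s stopword list:

```
!pip install google trafilatura nltk sentence-transformers

import nltk
nltk.download('stopwords')  # English stopword list used in step 2
```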

Automating the Keyword Research Process

Let’s break down the Python script that automates our keyword research:

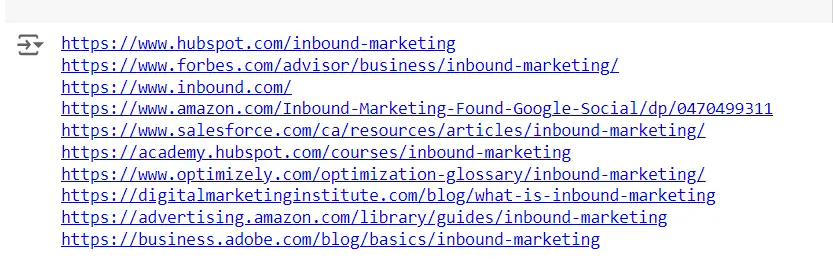

1. Fetch URLs from Google

First, we define a function to fetch URLs from Google search results:

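```python
# The `google` package (pip install google) provides the googlesearch module
try:
    from googlesearch import search
except ImportError as exc:
    raise ImportError("No module named 'googlesearch' found; run: pip install google") from exc


def getResults(uQuery, uTLD, uNum, uStart, uStop):
    """Fetch result URLs for uQuery from Google and return them as a list."""
    urls = []
    # pause=2 waits two seconds between HTTP requests to reduce the chance of being blocked
    for url in search(uQuery, tld=uTLD, num=uNum, start=uStart, stop=uStop, pause=2):
        urls.append(url)
        print(url)
    return urls


# Example values: the query analyzed later in this article, and one page of results
uQuery_1 = "inbound marketing"
uNum = 10

# start is a zero-based offset (start=0 is the first result); stop caps the number of URLs
results_1 = getResults(uQuery_1, "com", uNum, 0, uNum)
```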

2. Extract keywords and perform N-Gram analysis

Next, we have our main function that extracts keywords:

```python
import re
from collections import Counter

import trafilatura
from nltk.corpus import stopwords


def extract_keywords(urls):
    # Each entry is an (n-gram, rank_category) pair
    one_gram = []
    two_gram = []
    three_gram = []

    # English stopwords, plus the single digits 0-9
    stop_words = set(stopwords.words('english'))
    stop_words.update(set('0123456789'))

    for rank, url in enumerate(urls, start=1):
        try:
            # Download the page and extract its main text content
            downloaded = trafilatura.fetch_url(url)
            text = trafilatura.extract(downloaded)

            if text:
                # Clean the text: strip punctuation, lowercase, split on whitespace
                text = re.sub(r'[^\w\s]', '', text).lower()
                words = text.split()

                # Bucket the page by its position in the search results
                rank_category = "top 3" if rank <= 3 else "4-10"

                # Extract 1-, 2-, and 3-grams, each tagged with the page's rank category
                for i in range(len(words)):
                    one_gram.append((words[i], rank_category))

                for i in range(len(words) - 1):
                    two_gram_candidate = words[i] + ' ' + words[i + 1]
                    two_gram.append((two_gram_candidate, rank_category))

                for i in range(len(words) - 2):
                    three_gram_candidate = words[i] + ' ' + words[i + 1] + ' ' + words[i + 2]
                    three_gram.append((three_gram_candidate, rank_category))

        except Exception as e:
            print(f"Error processing {url}: {e}")

    # extract_keywords continues in step 3: stopword filtering and counting
```

This function does the heavy lifting:

- It crawls the URLs we’ve fetched

- Extracts text content

- Performs N-Gram analysis (1-gram, 2-gram, and 3-gram)

- Filters out stopwords

- Counts keyword frequencies
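The cleaning step inside extract_keywords deletes every character that is neither a word character nor whitespace. The sketch below isolates that step (the helper names clean_and_split and clean_and_split_spaced are hypothetical) and shows one side effect: a curly apostrophe is deleted rather than replaced, so “business’s” becomes “businesss”, a likely origin of tokens like that in the results later in this article. The spaced variant is our own suggestion, not part of the original script.

```python
import re


def clean_and_split(text):
    """Mirror the cleaning in extract_keywords: drop punctuation, lowercase, split."""
    # [^\w\s] matches any character that is neither a word character nor whitespace
    return re.sub(r"[^\w\s]", "", text).lower().split()


print(clean_and_split("A business’s inbound marketing plan."))
# ['a', 'businesss', 'inbound', 'marketing', 'plan']


# Variant (not in the original script): replace punctuation with a space so that
# apostrophes and dashes split words instead of fusing them
def clean_and_split_spaced(text):
    return re.sub(r"[^\w\s]", " ", text).lower().split()


print(clean_and_split_spaced("A business’s inbound marketing plan."))
# ['a', 'business', 's', 'inbound', 'marketing', 'plan']
```

The stray "s" produced by the variant is in NLTK’s English stopword list, so the later stopword filter would drop it.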

3. Filter stopwords, count frequencies, and categorize

The rest of extract_keywords processes the extracted n-grams with a nested helper, filter_stopwords_and_count. The helper discards every n-gram that contains a stopword and counts the remaining ones separately by source: either the top 3 search results or positions 4 through 10. This split shows which keywords are most strongly associated with top-ranking content. Python’s Counter objects tally the occurrences efficiently, and the helper returns a list of tuples, each holding an n-gram together with its frequency in both categories. With this structure, we can compare the relative importance of keywords and phrases in high-ranking versus lower-ranking content, which provides valuable input for SEO strategy development.

```python
    # (continuing inside extract_keywords)
    def filter_stopwords_and_count(ngram_list):
        ngram_counts_top3 = Counter()
        ngram_counts_4to10 = Counter()

        for ngram, category in ngram_list:
            # Keep the n-gram only if none of its words is a stopword
            if all(word not in stop_words for word in ngram.split()):
                if category == "top 3":
                    ngram_counts_top3[ngram] += 1
                else:
                    ngram_counts_4to10[ngram] += 1

        # Merge both counters into (ngram, count_top3, count_4to10) tuples;
        # indexing a Counter with an unseen key returns 0
        all_ngrams = set(ngram_counts_top3) | set(ngram_counts_4to10)
        return [(ngram, ngram_counts_top3[ngram], ngram_counts_4to10[ngram])
                for ngram in all_ngrams]

    one_gram_counts = filter_stopwords_and_count(one_gram)
    two_gram_counts = filter_stopwords_and_count(two_gram)
    three_gram_counts = filter_stopwords_and_count(three_gram)

    return {
        'one_gram': one_gram_counts,
        'two_gram': two_gram_counts,
        'three_gram': three_gram_counts,
    }
```
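To make the returned structure concrete, here is a self-contained sketch of the same filter-and-count logic run on a few hand-made (n-gram, category) pairs. The tiny stopword set stands in for NLTK’s English list, and the function name count_by_bucket is hypothetical. The result is sorted only because iterating over a set has no fixed order.

```python
from collections import Counter


def count_by_bucket(ngram_list, stop_words):
    """Count n-grams per rank bucket, skipping any n-gram that contains a stopword."""
    top3, rest = Counter(), Counter()
    for ngram, category in ngram_list:
        if all(word not in stop_words for word in ngram.split()):
            bucket = top3 if category == "top 3" else rest
            bucket[ngram] += 1
    # One (ngram, count_top3, count_4to10) tuple per n-gram seen in either bucket
    return sorted((ngram, top3[ngram], rest[ngram]) for ngram in set(top3) | set(rest))


stop_words = {"the", "of", "is"}  # stand-in for stopwords.words('english')
sample = [
    ("inbound marketing", "top 3"),
    ("inbound marketing", "top 3"),
    ("inbound marketing", "4-10"),
    ("the funnel", "top 3"),        # dropped: contains the stopword "the"
    ("outbound marketing", "4-10"),
]
print(count_by_bucket(sample, stop_words))
# [('inbound marketing', 2, 1), ('outbound marketing', 0, 1)]
```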

4. NLP processing

Finally, we use NLP to filter out irrelevant keywords. We first vectorize the search query and each keyword with a sentence-embedding model (paraphrase-MiniLM-L6-v2), then keep only the keywords whose cosine similarity to the query is above 0.5 (50%).

```python
from sentence_transformers import SentenceTransformer, util


def calculate_cosine_similarity(keyword_data, uQuery_1, threshold=0.5):
    # Initialize a small pretrained sentence-embedding model
    model = SentenceTransformer('paraphrase-MiniLM-L6-v2')

    # Vectorize the user query
    query_embedding = model.encode(uQuery_1, convert_to_tensor=True)

    def get_similar_keywords(n_grams):
        # n_grams is a list of (ngram, count_top3, count_4to10) tuples
        if not n_grams:
            return []

        # Vectorize all n-gram texts in one batch
        n_gram_texts = [ngram[0] for ngram in n_grams]
        n_gram_embeddings = model.encode(n_gram_texts, convert_to_tensor=True)

        # Cosine similarity between the query and every n-gram
        cosine_scores = util.pytorch_cos_sim(query_embedding, n_gram_embeddings)[0]

        # Keep n-grams whose similarity exceeds the threshold (0.5 by default),
        # returned as (ngram, count_top3, count_4to10, similarity)
        return [
            (ngram, count_top3, count_4to10, score.item())
            for (ngram, count_top3, count_4to10), score in zip(n_grams, cosine_scores)
            if score.item() > threshold
        ]

    # Filter each n-gram size and return the same structure as keyword_data
    return {
        'one_gram': get_similar_keywords(keyword_data['one_gram']),
        'two_gram': get_similar_keywords(keyword_data['two_gram']),
        'three_gram': get_similar_keywords(keyword_data['three_gram']),
    }


# Run the full pipeline on the URLs fetched in step 1
keyword_data = extract_keywords(results_1)
similar_keyword_data = calculate_cosine_similarity(keyword_data, uQuery_1)
```
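Cosine similarity measures the angle between two vectors: cos(a, b) = (a · b) / (‖a‖ ‖b‖). It ranges from -1 to 1 and equals 1 when the vectors point the same way. The sketch below computes it in plain Python and applies the same 0.5 cut-off to made-up three-dimensional vectors. Real embeddings from paraphrase-MiniLM-L6-v2 have 384 dimensions, and the vectors and the "recipe ideas" keyword here are illustrative assumptions, not model output.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy "embeddings" (illustrative values, not produced by any model)
query = [1.0, 1.0, 0.0]
candidates = {
    "inbound strategy": [1.0, 0.8, 0.1],
    "recipe ideas": [0.2, 0.3, 1.0],
}

# Keep candidates whose similarity to the query exceeds 0.5, as the script does
for keyword, vector in candidates.items():
    score = cosine_similarity(query, vector)
    print(f"{keyword}: {score:.4f} {'kept' if score > 0.5 else 'dropped'}")
```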

5. Display output

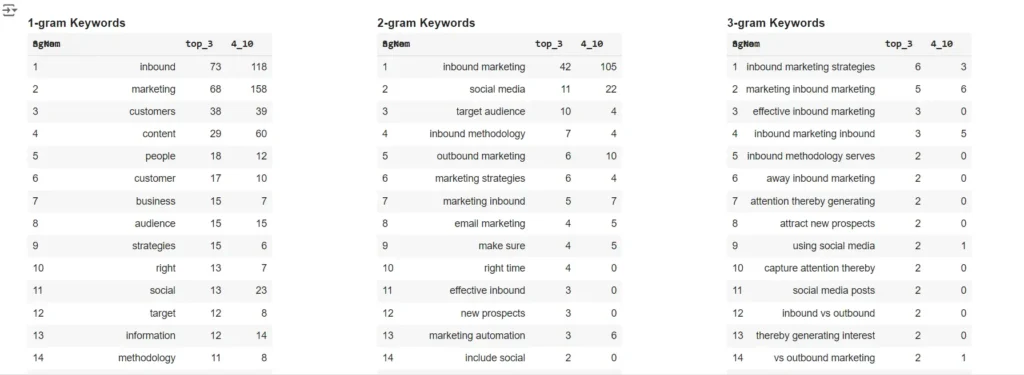

Finally, we display all of the relevant keywords in a table. The following is the output before NLP analysis:

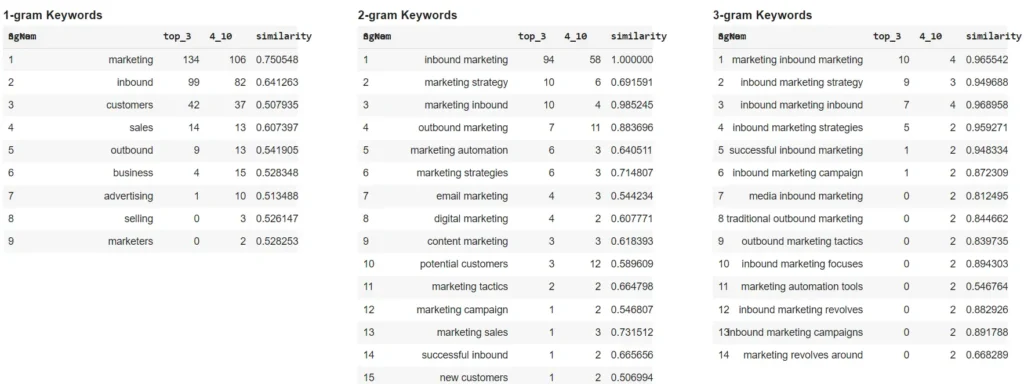

And the following is the output after NLP analysis:

Practical Example: Keyword research via N-Gram analysis

Let’s look at the results of our analysis for the query “inbound marketing”:

1-gram keywords:

market: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.5901

businesss: Top 3 Frequency = 1, 4-10 Frequency = 1, Similarity = 0.5181

marketer: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.5018

advertising: Top 3 Frequency = 1, 4-10 Frequency = 10, Similarity = 0.5135

promotional: Top 3 Frequency = 1, 4-10 Frequency = 1, Similarity = 0.5341

marketing: Top 3 Frequency = 134, 4-10 Frequency = 106, Similarity = 0.7505

sales: Top 3 Frequency = 14, 4-10 Frequency = 13, Similarity = 0.6074

selling: Top 3 Frequency = 0, 4-10 Frequency = 3, Similarity = 0.5261

marketers: Top 3 Frequency = 0, 4-10 Frequency = 2, Similarity = 0.5283

withinbound: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.5631

distribute: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.5031

marketingapproved: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.6115

business: Top 3 Frequency = 4, 4-10 Frequency = 15, Similarity = 0.5283

customers: Top 3 Frequency = 42, 4-10 Frequency = 37, Similarity = 0.5079

promotion: Top 3 Frequency = 2, 4-10 Frequency = 0, Similarity = 0.5027

inbound: Top 3 Frequency = 99, 4-10 Frequency = 82, Similarity = 0.6413

outbound: Top 3 Frequency = 9, 4-10 Frequency = 13, Similarity = 0.5419

2-gram keywords:

marketing teams: Top 3 Frequency = 0, 4-10 Frequency = 2, Similarity = 0.5924

overview inbound: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.6469

marketing allows: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.6429

marketing email: Top 3 Frequency = 2, 4-10 Frequency = 1, Similarity = 0.5559

marketing relies: Top 3 Frequency = 2, 4-10 Frequency = 0, Similarity = 0.6747

offering inbound: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.7615

marketing focuses: Top 3 Frequency = 0, 4-10 Frequency = 2, Similarity = 0.5827

marketing wants: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.6530

customers want: Top 3 Frequency = 2, 4-10 Frequency = 0, Similarity = 0.5161

marketing based: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.6955

measure marketing: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.6472

related businesses: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.5092

audience inbound: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.5151

marketing must: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.6999

although inbound: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.5589

phrase inbound: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.5547

inbound strategy: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.7143

direct sales: Top 3 Frequency = 1, 4-10 Frequency = 1, Similarity = 0.6401

marketing typically: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.7029

customer insight: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.5111

marketing takes: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.6642

potential customer: Top 3 Frequency = 0, 4-10 Frequency = 2, Similarity = 0.5695

marketing search: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.6259

sales activation: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.5633

outbound marketing: Top 3 Frequency = 7, 4-10 Frequency = 11, Similarity = 0.8837

customer acquisition: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.5535

and more...

3-gram keywords:

work withinbound marketing: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.8438

broader marketing strategy: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.6947

media inbound marketing: Top 3 Frequency = 0, 4-10 Frequency = 2, Similarity = 0.8125

inbound marketing vs: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.8793

automate sales processes: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.5228

campaign outbound marketing: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.7475

marketing successful inbound: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.9382

marketing sales customer: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.6912

inbound marketing back: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.8978

traditional outbound marketing: Top 3 Frequency = 0, 4-10 Frequency = 2, Similarity = 0.8447

marketing must deliver: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.6383

make meaningful marketing: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.6199

attracting new customers: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.5763

effective inbound marketing: Top 3 Frequency = 3, 4-10 Frequency = 0, Similarity = 0.9429

experiences showing customers: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.5365

inbound marketing example: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.9564

inbound marketing owned: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.8650

potential customers share: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.5345

understanding inbound marketing: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.9426

businesss digital marketing: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.5992

marketing important inbound: Top 3 Frequency = 2, 4-10 Frequency = 0, Similarity = 0.9203

streams email marketing: Top 3 Frequency = 0, 4-10 Frequency = 1, Similarity = 0.5728

overview inbound marketing: Top 3 Frequency = 1, 4-10 Frequency = 0, Similarity = 0.9597

inbound marketing include: Top 3 Frequency = 2, 4-10 Frequency = 0, Similarity = 0.9538
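The listing above uses a fixed line format. Assuming similar_keyword_data is the dictionary returned by calculate_cosine_similarity, a sketch along these lines would print it that way. The helper format_keywords is hypothetical, and sorting each group by similarity is our own addition (the listing above is unsorted). The sample rows are copied from the results above.

```python
def format_keywords(similar_keyword_data):
    """Render (ngram, top3, rest, similarity) tuples in the article's listing format."""
    lines = []
    groups = [("one_gram", "1-gram"), ("two_gram", "2-gram"), ("three_gram", "3-gram")]
    for key, label in groups:
        lines.append(f"{label} keywords:")
        # Highest similarity first (not done in the original listing)
        rows = sorted(similar_keyword_data[key], key=lambda row: row[3], reverse=True)
        for ngram, top3, rest, sim in rows:
            lines.append(
                f"{ngram}: Top 3 Frequency = {top3}, 4-10 Frequency = {rest}, Similarity = {sim:.4f}"
            )
    return "\n".join(lines)


# Sample rows copied from the 1-gram and 2-gram results above
data = {
    "one_gram": [("inbound", 99, 82, 0.6413), ("marketing", 134, 106, 0.7505)],
    "two_gram": [("outbound marketing", 7, 11, 0.8837)],
    "three_gram": [],
}
print(format_keywords(data))
```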


Enhancing Your SEO Strategy

Now that we have this data, how can we use it to improve our SEO strategy?

Identify primary keywords

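From our results, we can see that “inbound marketing” is indeed the primary keyword. Its component words are the most frequent 1-grams (“marketing”: 134 occurrences in the top 3 results and 106 in positions 4-10; “inbound”: 99 and 82), and the phrase appears frequently in the 2-gram and 3-gram results as well.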
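Frequency can be turned into a quick primary-keyword shortlist. As a hedged example (the ranking rule and the function name rank_by_frequency are our own, not part of the original script), the sketch below sorts 1-gram results by total frequency across both buckets and reports what share of each term’s occurrences comes from the top 3 pages, following up on the top-3 versus 4-10 comparison described in step 3. The sample rows are copied from the 1-gram results above.

```python
def rank_by_frequency(rows, top_k=3):
    """Return (ngram, total count, share from top 3 results), most frequent first.

    Hypothetical ranking rule: total frequency across both rank buckets.
    """
    ranked = []
    for ngram, top3, rest, _similarity in rows:
        total = top3 + rest
        share_top3 = round(top3 / total, 2) if total else 0.0
        ranked.append((ngram, total, share_top3))
    ranked.sort(key=lambda row: row[1], reverse=True)
    return ranked[:top_k]


# (ngram, top 3 frequency, 4-10 frequency, similarity) rows from the results above
one_gram_rows = [
    ("marketing", 134, 106, 0.7505),
    ("inbound", 99, 82, 0.6413),
    ("customers", 42, 37, 0.5079),
    ("business", 4, 15, 0.5283),
]
for row in rank_by_frequency(one_gram_rows):
    print(row)
```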

Discover related topics

Terms like “content marketing”, “social media”, and “email marketing” appear frequently, indicating these are important related topics to cover.

Long-tail keyword opportunities

3-gram results like “inbound marketing strategy” and “search engine optimization” provide opportunities for more specific, long-tail keywords.
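One simple way to shortlist long-tail candidates is to keep the 3-grams that contain the full seed query as whole words and order them by similarity. This is an assumption of ours rather than part of the original script, and the function name long_tail_candidates is hypothetical. Padding with spaces ensures that a fused token such as “withinbound” does not count as a match. The sample rows are copied from the 3-gram results above.

```python
def long_tail_candidates(three_gram_rows, query):
    """Keep 3-grams containing the whole query phrase as whole words, most similar first."""
    # Space padding makes this a whole-word phrase match rather than a raw substring match
    phrase = f" {query.lower()} "
    matches = [row for row in three_gram_rows if phrase in f" {row[0]} "]
    return sorted(matches, key=lambda row: row[3], reverse=True)


three_gram_rows = [
    ("effective inbound marketing", 3, 0, 0.9429),
    ("inbound marketing example", 0, 1, 0.9564),
    ("marketing successful inbound", 0, 1, 0.9382),
    ("work withinbound marketing", 1, 0, 0.8438),
    ("understanding inbound marketing", 0, 1, 0.9426),
]
for ngram, top3, rest, sim in long_tail_candidates(three_gram_rows, "inbound marketing"):
    print(ngram, sim)
```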

Content ideation

Use the discovered keywords and phrases to brainstorm new content ideas. For example, you might create content around “inbound marketing strategies for social media”.

Optimize existing content

Review your existing content and ensure you’re adequately covering these key terms and topics.
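To make that review systematic, a sketch like the one below applies the same cleaning as extract_keywords to your own page and lists which target keywords never appear as whole-word phrases. The function name missing_keywords and the sample page text are hypothetical.

```python
import re


def missing_keywords(page_text, keywords):
    """Return the keywords that do not occur as whole-word phrases in page_text."""
    # Same cleaning as extract_keywords (strip punctuation, lowercase), then collapse
    # whitespace and pad with spaces so phrase checks match whole words only
    words = re.sub(r"[^\w\s]", "", page_text).lower().split()
    normalized = " " + " ".join(words) + " "
    return [kw for kw in keywords if f" {kw.lower()} " not in normalized]


page = "Our guide to inbound marketing covers email marketing and customer acquisition."
targets = ["inbound marketing", "email marketing", "outbound marketing", "customer acquisition"]
print(missing_keywords(page, targets))  # ['outbound marketing']
```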

Google Colab notebook: AIHelperHub – NLP Serp N-Gram Analysis.ipynb

Conclusion

Automating competitor keyword research using N-Gram analysis provides a wealth of data to inform your SEO strategy. By understanding what keywords and phrases your competitors are ranking for, you can identify gaps in your own content and opportunities for improvement.

Remember, while this automated approach provides valuable insights, it should be used in conjunction with other SEO tools and strategies. Always consider user intent and create high-quality, valuable content that serves your audience’s needs.

By leveraging the power of Python and data analysis, you can stay ahead in the competitive world of SEO and inbound marketing. Happy optimizing!
