Last Updated on September 19, 2025

In the vast landscape of the internet, ensuring that your web pages are discoverable and indexed by search engines is crucial for maximizing visibility and driving organic traffic. One powerful tool that aids in this process is the Google Indexing API. This blog post explores the significance of web page indexing, introduces the Google Indexing API, and highlights the benefits of using it with Python.

Contents

- Introduction

- Step 1: Create a Project on Google Cloud Platform Account

- Step 2: Create Service Account on GCP

- Step 3: Create an API key

- Step 4: Enable/Activate Indexing API

- Step 5: Giving owner access to service account email id

- Step 6: Preparing the Excel file for URLs

- Step 7: Setup the Python environment and run the code

- Best Practices and Tips

- Conclusion

Introduction to Google Indexing API

The Google Indexing API is a service provided by Google that allows developers to directly notify Google about new or updated URLs on their website, enabling faster indexing and ensuring that fresh content is promptly available to users. It provides a convenient and efficient way to communicate with Google’s search index and automate the indexing process.

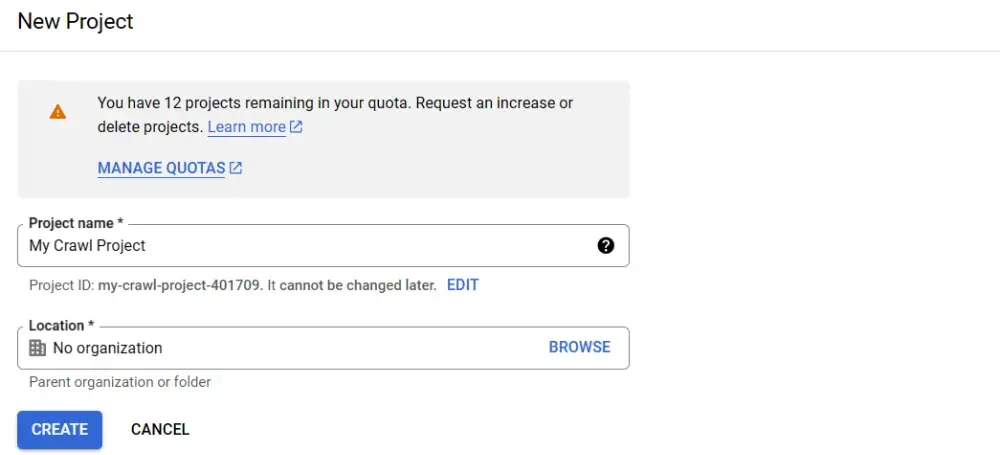

Step 1: Create a Project on Google Cloud Platform Account

Log in to Google Cloud Platform (GCP) with your Google account and set up a project through which you will access the Google Indexing API. In the Google Cloud console, click ‘Create project’, give the project a name, and click ‘Create’.

The project dashboard opens afterwards.

Step 2: Create Service Account on GCP

Once you’ve got your project set up, the next thing to do is select it in the project section of the menu. Then, go over to IAM & ADMIN on the left side of the menu and click on ‘Service Accounts’.

After this, click on ‘Create service account’ to make your account. In the first part, give your account a name and hit ‘Create and continue’. Once that’s done, you can move on to the second step.

In the ‘Grant this service account access to project’ section, choose a role for your account. Make sure to select ‘Owner’ from the Quick access menu in the ‘Basic’ section. Click ‘Continue,’ and in the next step, just click ‘Done’.

Step 3: Create an API key

Requests to the Indexing API are authenticated with the service account created in Step 2. Although this step calls it an API key, what you generate here is a JSON key file for that service account. Follow these steps to generate it:

- Go to the Service Accounts page and select your project.

- Click the three dots under Actions for the service account you created above, then click Manage Keys.

- Click the ADD KEY drop-down and select Create new key.

- Select JSON as the key type and click CREATE.

- The JSON key file is downloaded to your computer. Keep it private, because anyone who holds it can act as the service account.
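Step 5 needs the service account's email address, and the downloaded key file already contains it. The following is a minimal sketch, not part of the original script, that checks the file looks like a service account key and returns its `client_email` field. The function name `read_service_account_email` is made up for this illustration.

```python
import json

# Fields a service account key file is expected to contain
REQUIRED_FIELDS = ("type", "client_email", "private_key")


def read_service_account_email(key_path):
    """Return the client_email stored in a service account JSON key file.

    Raises ValueError if the file does not look like a service account key.
    """
    with open(key_path, encoding="utf-8") as handle:
        key_data = json.load(handle)

    missing = [field for field in REQUIRED_FIELDS if field not in key_data]
    if missing:
        raise ValueError("Key file is missing fields: " + ", ".join(missing))
    if key_data["type"] != "service_account":
        raise ValueError("Unexpected key type: " + repr(key_data["type"]))

    return key_data["client_email"]
```

Calling `read_service_account_email('C:\\service_account.json')` should give you the address to add in Google Search Console.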

Step 4: Enable/Activate Indexing API

Next, enable the Indexing API, which is disabled by default, so that the Python code can use Google’s indexing service. Open the Indexing API page in the API Library of the Google Cloud console and click ‘Enable’.

Step 5: Giving owner access to service account email id

Before the Indexing API will accept notifications for your site, the service account must be added as an owner of the site in Google Search Console. Open Google Search Console and head to the ‘Settings’ section. Then click ‘Users and permissions’ and choose ‘Add user’. In the dialog that appears, enter the email address of the service account you created in Step 2 (it is also stored as client_email in the JSON key file) and set its permission to ‘Owner’.

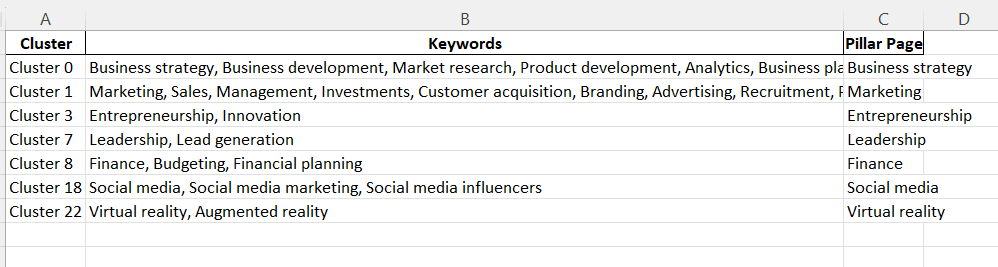

Step 6: Preparing the Excel file for URLs

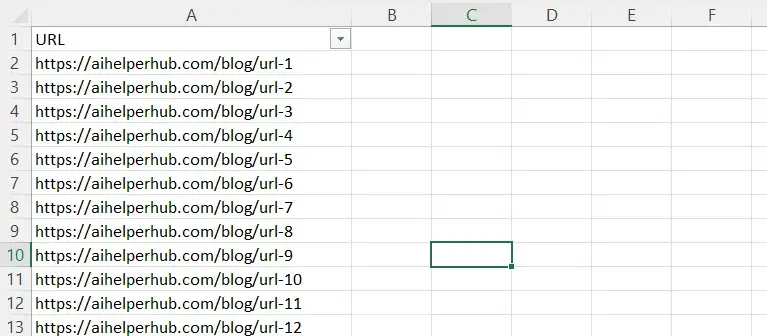

Next, you’ll want to take those URLs and save them in a excel file. Make a list of all of those URLs – any changes, new content, that you want to get indexed to google and put in it format with 1 columns – URL. Just a quick heads up, when using the Indexing API, you can only submit up to 100 links each day. Your Excel file should have a format similar to this:
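Because submissions are capped, a long URL list has to be spread over several runs. Here is a minimal sketch of that split. The helper name `split_into_daily_batches` and the `daily_limit` parameter are assumptions for illustration; set the limit to whatever quota applies to your project.

```python
def split_into_daily_batches(urls, daily_limit):
    """Split a list of URLs into consecutive chunks of at most daily_limit items."""
    if daily_limit < 1:
        raise ValueError("daily_limit must be at least 1")
    return [urls[i:i + daily_limit] for i in range(0, len(urls), daily_limit)]


# Example: five URLs with a limit of two per day need three runs
example_urls = [f"https://example.com/page-{n}" for n in range(1, 6)]
batches = split_into_daily_batches(example_urls, daily_limit=2)
print(len(batches))   # 3
print(batches[-1])    # ['https://example.com/page-5']
```

Each chunk can then be saved to its own file or submitted on a separate day.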

Step 7: Setup the Python environment and run the code

There are two ways to run the Python code:

- Run the code on your laptop

- Run the code on Google Colab

To run the code on your laptop, install Python for Windows along with a suitable IDE (I prefer Visual Studio Code). If you don’t want to install anything on your system, you can use Google Colab instead.
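Whichever environment you choose, the script depends on four third-party packages, which on PyPI are named oauth2client, google-api-python-client, httplib2, and openpyxl. The sketch below is an optional check, not part of the original script, that reports which of them still need to be installed. The helper name `missing_packages` is hypothetical.

```python
import importlib.util

# Importable module name -> name of the package to install with pip
REQUIRED_MODULES = {
    "oauth2client": "oauth2client",
    "googleapiclient": "google-api-python-client",
    "httplib2": "httplib2",
    "openpyxl": "openpyxl",
}


def missing_packages(required=REQUIRED_MODULES):
    """Return the pip names of the required modules that cannot be found."""
    return [
        pip_name
        for module_name, pip_name in required.items()
        if importlib.util.find_spec(module_name) is None
    ]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Install with: pip install " + " ".join(missing))
    else:
        print("All required packages are installed.")
```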

Importing Required Libraries

In this section, we begin by importing essential Python libraries. These libraries are critical for various aspects of the script’s functionality.

- oauth2client.service_account: This library enables us to work with service account credentials.

- googleapiclient.discovery: It allows us to build and interact with Google API services.

- httplib2: This library is used to handle HTTP requests, essential for communication with Google’s services.

- openpyxl: We use this library to work with Excel files, specifically for reading URLs from the spreadsheet.

    from oauth2client.service_account import ServiceAccountCredentials
    from googleapiclient.discovery import build
    import httplib2
    import openpyxl

Authenticating with Google Indexing API

The Google Indexing API authenticates requests with OAuth 2.0. The JSON file downloaded in Step 3 is a service account key rather than a simple API key, and the script uses it to obtain an access token for the https://www.googleapis.com/auth/indexing scope.

We load the service account credentials from the JSON key file using the ServiceAccountCredentials.from_json_keyfile_name method. Then we authorize HTTP requests with these credentials using credentials.authorize(httplib2.Http()).

    credentials = ServiceAccountCredentials.from_json_keyfile_name(JSON_KEY_FILE, scopes=SCOPES)
    http = credentials.authorize(httplib2.Http())

Reading URLs from an Excel File

In this part, we use the openpyxl library to load an Excel file (EXCEL_FILE) and iterate through its rows. We extract URLs from the spreadsheet, assuming that the first row contains column names, and skip it during processing.

    workbook = openpyxl.load_workbook(EXCEL_FILE)
    worksheet = workbook.active

    urls = []
    for i, row in enumerate(worksheet.iter_rows(values_only=True), start=1):
        # Skip the first row (assumed to be column names)
        if i == 1:
            continue
        for cell in row:
            # Ignore empty cells so that None is never submitted as a URL
            if cell:
                urls.append(cell)
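Spreadsheet cells can hold stray whitespace, duplicates, or values that are not URLs at all, and each of those would waste a request from the daily quota. The following is a minimal sketch of a clean-up pass that could run on the `urls` list before submission. The helper `clean_urls` is an illustrative addition, not part of the original script.

```python
from urllib.parse import urlsplit


def clean_urls(raw_values):
    """Return unique, well-formed http(s) URLs in their original order."""
    seen = set()
    cleaned = []
    for value in raw_values:
        if not isinstance(value, str):
            continue  # empty cells arrive as None, numeric cells as int/float
        url = value.strip()
        try:
            parts = urlsplit(url)
        except ValueError:
            continue  # malformed value that cannot be parsed as a URL
        if parts.scheme not in ("http", "https") or not parts.netloc:
            continue  # not an absolute web URL
        if url not in seen:
            seen.add(url)
            cleaned.append(url)
    return cleaned
```

In the script this would be used as `urls = clean_urls(urls)` right after the Excel file has been read.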

Sending URL Notifications in a Batch

Here, we iterate through the list of URLs extracted from the Excel file. For each URL, we create a URL notification request and add it to the batch HTTP request. This approach allows us to send multiple URL notifications in a single batch, reducing overhead.

Finally, we execute the batch HTTP request using batch.execute(). This step sends all the URL notification requests in the batch to the Google Indexing API in a single HTTP call. Each notification asks Google to recrawl the URL; it does not by itself guarantee that the page will be indexed.

    # `service` and `batch` are created in the full script below
    for url in urls:
        batch.add(service.urlNotifications().publish(
            body={"url": url, "type": "URL_UPDATED"}))

    batch.execute()
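The request body is a small dictionary with the URL and a notification type. Besides URL_UPDATED, the Indexing API also accepts URL_DELETED for pages that have been removed. Below is a minimal sketch of a helper that builds and validates this body; the name `build_notification` is hypothetical and does not come from the client library.

```python
# Notification types accepted by the Indexing API's publish method
VALID_TYPES = ("URL_UPDATED", "URL_DELETED")


def build_notification(url, notification_type="URL_UPDATED"):
    """Return the request body for a single URL notification."""
    if notification_type not in VALID_TYPES:
        raise ValueError("Unsupported notification type: " + repr(notification_type))
    return {"url": url, "type": notification_type}


print(build_notification("https://example.com/new-post"))
# {'url': 'https://example.com/new-post', 'type': 'URL_UPDATED'}
print(build_notification("https://example.com/old-post", "URL_DELETED"))
# {'url': 'https://example.com/old-post', 'type': 'URL_DELETED'}
```

The returned dictionary can be passed as the `body` argument of `service.urlNotifications().publish(...)`.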

Full Python code for implementing Google Indexing API with Python

    from oauth2client.service_account import ServiceAccountCredentials
    from googleapiclient.discovery import build
    import httplib2
    import openpyxl

    # Paths to the Excel file and the service account JSON key
    EXCEL_FILE = 'C:\\urls.xlsx'
    JSON_KEY_FILE = 'C:\\service_account.json'

    SCOPES = ["https://www.googleapis.com/auth/indexing"]
    # REST endpoint behind service.urlNotifications().publish(); kept for reference only
    ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"

    # Authorize credentials
    credentials = ServiceAccountCredentials.from_json_keyfile_name(JSON_KEY_FILE, scopes=SCOPES)
    http = credentials.authorize(httplib2.Http())

    # Build the service on top of the authorized HTTP object
    service = build('indexing', 'v3', http=http)

    def insert_event(request_id, response, exception):
        # Called once per request in the batch: print the error or the API response
        if exception is not None:
            print(exception)
        else:
            print(response)

    batch = service.new_batch_http_request(callback=insert_event)

    # Read URLs from Excel file
    workbook = openpyxl.load_workbook(EXCEL_FILE)
    worksheet = workbook.active

    urls = []
    for i, row in enumerate(worksheet.iter_rows(values_only=True), start=1):
        # Skip the first row (assumed to be column names)
        if i == 1:
            continue
        for cell in row:
            # Ignore empty cells so that None is never submitted as a URL
            if cell:
                urls.append(cell)

    # Queue one URL_UPDATED notification per URL, then send them together
    for url in urls:
        batch.add(service.urlNotifications().publish(
            body={"url": url, "type": "URL_UPDATED"}))

    batch.execute()

Best Practices and Tips

When working with the Google Indexing API, consider the following best practices:

- Manage rate limits and handle quotas to prevent API usage limitations.

- Implement logging and error handling mechanisms to capture and handle errors gracefully.

- Monitor API usage and performance to track the effectiveness and optimize the integration.
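As one way to act on these points, the sketch below replaces the print-based callback with one that logs every result and keeps failures for a later retry, and adds a small helper for exponential backoff delays. The names `make_batch_callback` and `backoff_delays` are illustrative assumptions; only the callback signature (request_id, response, exception) matches what the script's batch request already uses.

```python
import logging

logging.basicConfig(level=logging.INFO,
                    format="%(asctime)s %(levelname)s %(message)s")
logger = logging.getLogger("indexing")


def make_batch_callback(succeeded, failed):
    """Build a batch callback that records each result instead of printing it."""
    def callback(request_id, response, exception):
        if exception is not None:
            logger.error("Request %s failed: %s", request_id, exception)
            failed.append((request_id, exception))
        else:
            logger.info("Request %s accepted", request_id)
            succeeded.append((request_id, response))
    return callback


def backoff_delays(max_retries, base_seconds=1.0):
    """Return exponentially growing wait times: base, 2*base, 4*base, ..."""
    return [base_seconds * (2 ** attempt) for attempt in range(max_retries)]
```

In the script, the callback would be passed as `service.new_batch_http_request(callback=make_batch_callback(ok, errors))`, with `ok` and `errors` being two empty lists. After `batch.execute()`, the `errors` list shows which requests to retry, waiting for each delay from `backoff_delays` between attempts.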

Conclusion

By leveraging the capabilities of the Google Indexing API with Python, webmasters and developers can streamline the process of indexing web pages, resulting in improved visibility, search engine ranking, and organic traffic. The integration of the Google Indexing API empowers you to have better control over the indexing process, ensuring that your content is promptly available to users. With the potential for increased discoverability and engagement, the Google Indexing API with Python is a valuable tool for optimizing your web presence.
