Last Updated on September 19, 2025

Contents

- Understanding SEO Keyword Clustering

- Use Cases of Keyword Clustering

- Introduction to Python Libraries for Keyword Clustering

- Applying Clustering Algorithms: A Comparison of Spacy, BERT, and RoBERTa

- Comparison of Spacy, BERT, and RoBERTa for Keyword Clustering

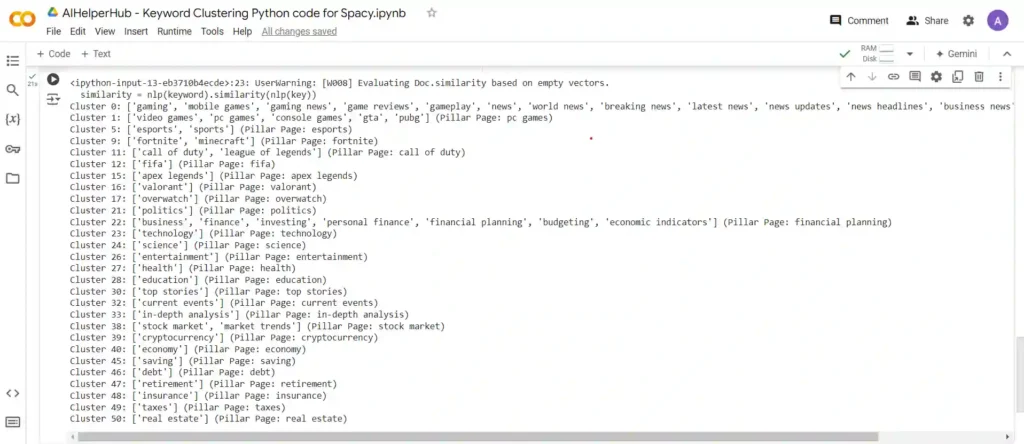

- Keyword Clustering Python code for Spacy

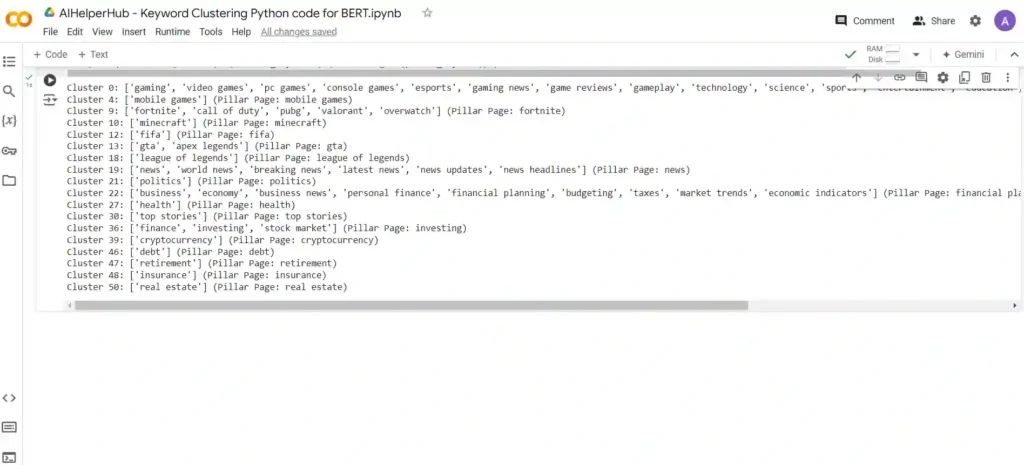

- Keyword Clustering Python code for Bert

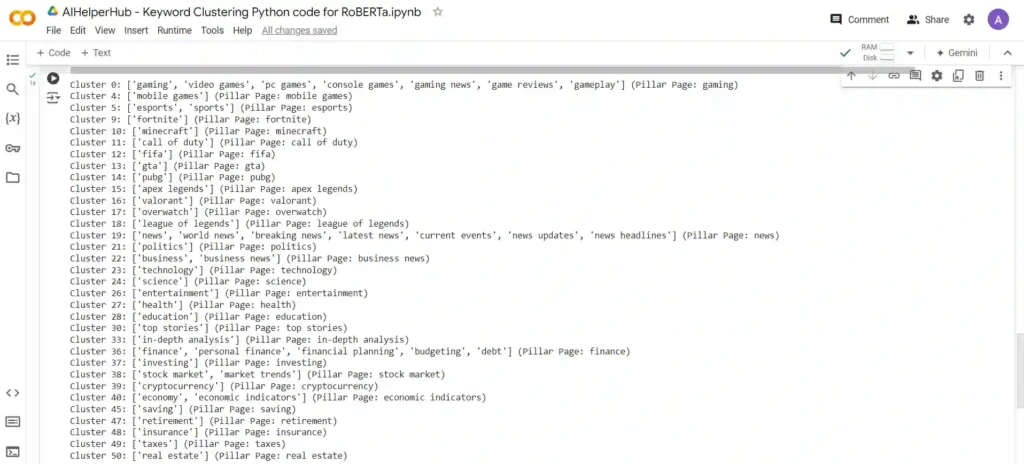

- Keyword Clustering Python code for RoBERTa

- Hybrid Approaches: Combining Clustering Algorithms

- Best Practices for Algorithm Selection and Fine-tuning

- Conclusion

In today’s digital landscape, search engine optimization (SEO) plays a vital role in improving the visibility and rankings of websites. One crucial aspect of effective SEO is keyword clustering, a technique that groups related keywords together based on their search intent. By understanding user behavior and aligning content with search intent, website owners can attract targeted traffic and enhance their organic search rankings.

In this comprehensive guide, we will explore the process of automating search intent clustering using Python, a versatile programming language with powerful data processing capabilities. Additionally, we will compare three popular algorithms—Spacy, BERT, and RoBERTa—to determine the most suitable approach for your SEO keyword grouping needs.

Understanding SEO Keyword Clustering/Grouping

Keyword clustering/grouping involves grouping similar keywords together to identify patterns and themes within a given dataset. This technique enables website owners to create targeted content that aligns with users’ search intent, ultimately improving their website’s visibility and ranking in search engine results.

To begin with keyword clustering, it is essential to collect relevant keyword data from reliable sources such as search engine reports, keyword research tools, or website analytics. Once the data is gathered, it needs to be cleaned and preprocessed to ensure accurate and meaningful clustering results.

Use Cases of Keyword Clustering

Keyword clustering is a technique used in SEO (Search Engine Optimization) and paid advertising to enhance the effectiveness of keyword targeting and improve the overall performance of marketing campaigns. Some use cases of keyword clustering in SEO and paid advertising include:

- Improved Keyword Research: Keyword clustering helps in organizing and grouping related keywords, providing insights into the overall keyword landscape. It helps identify variations, long-tail keywords, and keyword opportunities for content creation and optimization.

- Content Strategy and Optimization: By clustering keywords, SEO professionals can identify themes and topics that resonate with their target audience. It enables the creation of comprehensive content strategies and guides content optimization efforts for better search engine rankings.

- Enhanced On-Page SEO: Keyword clustering helps in optimizing web pages by mapping specific keyword clusters to relevant pages. It ensures that the content aligns with the user’s search intent and improves the page’s visibility in search engine results.

- Semantic SEO: Keyword clustering facilitates semantic analysis by identifying related terms and concepts. It helps search engines understand the context and relevance of content, leading to improved search rankings and better user experiences.

- PPC Campaign Optimization: In paid advertising campaigns, keyword clustering aids in organizing and structuring keyword lists. It helps improve the relevance of ads, increase click-through rates (CTR), and optimize ad spend by targeting specific keyword clusters that have higher conversion potential.

- Ad Group Segmentation: Keyword clustering assists in grouping related keywords into ad groups based on common themes or intent. This allows for more targeted ad copy and landing page experiences, resulting in improved Quality Scores and better campaign performance.

- Negative Keyword Identification: Keyword clustering helps identify irrelevant or negative keywords that may trigger irrelevant ad impressions or clicks. By excluding these keywords from campaigns, marketers can reduce wasted ad spend and improve campaign efficiency.

Introduction to Python Libraries for Keyword Clustering

Python offers a wide range of libraries that facilitate keyword clustering tasks. Three popular libraries for natural language processing (NLP) and machine learning—Spacy, BERT, and RoBERTa—stand out for their capabilities in handling text data and performing clustering analysis.

Spacy is a versatile NLP library that provides robust text processing capabilities. It offers various features such as tokenization, lemmatization, part-of-speech tagging, and named entity recognition, which can be leveraged to preprocess and analyze textual data for clustering purposes.

BERT (Bidirectional Encoder Representations from Transformers) is a powerful language model that revolutionized the field of natural language understanding. Fine-tuning BERT for keyword clustering tasks allows for more accurate and context-aware clustering results.

RoBERTa (Robustly Optimized BERT) is an extension of BERT that further refines its architecture and training techniques. By leveraging RoBERTa’s enhanced performance, website owners can achieve even better clustering results and gain deeper insights into search intent.

Applying Clustering Algorithms: A Comparison of Spacy, BERT, and RoBERTa

When it comes to applying clustering algorithms for keyword analysis, Spacy, BERT, and RoBERTa offer distinct strengths and capabilities.

Spacy provides an excellent starting point for keyword clustering with its extensive linguistic features. It allows for efficient data preprocessing, including tokenization, lemmatization, and named entity recognition. Additionally, Spacy offers built-in clustering functions that can be utilized for identifying similar keywords and grouping them together.

BERT, on the other hand, excels in understanding the contextual meaning of words and phrases. By fine-tuning BERT using labeled data, it becomes possible to extract more precise semantic representations and cluster keywords based on their underlying intent. BERT’s ability to capture nuanced language semantics enhances the accuracy of clustering results.

RoBERTa, an extension of BERT, further refines the language representation model and training techniques. It addresses some of BERT’s limitations and offers improved performance in various NLP tasks, including keyword clustering. Fine-tuning RoBERTa allows for even better clustering accuracy and more nuanced insights into search intent.

Comparison of Spacy, BERT, and RoBERTa for Keyword Grouping

| Criteria | SpaCy | BERT | RoBERTa |

|---|---|---|---|

| Accuracy | Provides reasonable accuracy with linguistic features. Performance may vary with dataset complexity. | High accuracy due to contextual understanding and fine-tuning capabilities. Captures subtle nuances effectively. | Improves upon BERT’s accuracy with enhanced architecture and training techniques. Particularly effective in high-precision scenarios. |

| Efficiency | Relatively efficient for smaller datasets. Built-in functions simplify the process but might be less effective for large datasets. | Efficient when using pre-trained models. Fine-tuning can be computationally intensive and time-consuming. | Similar to BERT but optimized for better efficiency. Training and fine-tuning may still be resource-intensive. |

| Contextual Understanding | Limited contextual understanding. Relies more on linguistic features and predefined rules. | Excellent contextual understanding. Captures detailed context and semantic meaning of keywords. | Enhanced contextual understanding over BERT. More accurate in capturing nuanced meanings and relationships. |

| Suitability for Different Clustering Scenarios | Suitable for smaller datasets and when linguistic features are crucial. Good for basic clustering tasks. | Shines in scenarios requiring detailed context and high precision. Ideal for complex and large datasets. | Best for scenarios needing improved accuracy and precision. Effective for both complex and large datasets with high requirements. |

| Computational Resources | Lower computational requirements. Faster for smaller datasets and simpler tasks. | Higher computational resources needed for fine-tuning. Pre-trained models are less resource-intensive but still significant. | Similar to BERT in terms of resource requirements but optimized for better performance. Still requires significant resources for fine-tuning. |

| Ease of Use | Easy to use with built-in functions for clustering. User-friendly for basic tasks. | Requires some setup for fine-tuning. Pre-trained models are easy to use, but fine-tuning requires more effort. | Easy to use with improvements over BERT. Fine-tuning process is similar to BERT but potentially more efficient. |

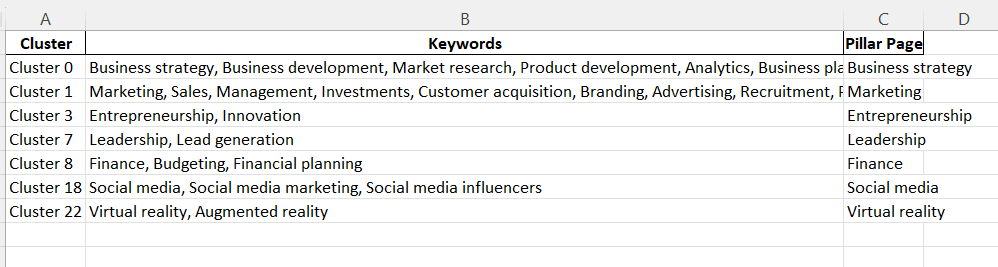

Keyword Clustering Python code for Spacy

Pre-requisites

- Pandas: The code uses the

pandaslibrary for reading and manipulating data. You can install it by runningpip install pandasin your command line or terminal. - spaCy: The code uses the

spaCylibrary for natural language processing tasks. You can install it by runningpip install spacyin your command line or terminal. - spaCy Model: The code uses the

en_core_web_lgspaCy model, which is a large English language model. You can download it by runningpython -m spacy download en_core_web_lgin your command line or terminal. - Excel File: The code assumes that there is an Excel file named “keywordcluster.xlsx” located at the path

'C:Users'. Make sure you have the Excel file at the specified location or modify the file path accordingly. - Excel File Structure: The code assumes that the Excel file contains a sheet named ‘spaCy’ where the data will be written. If the sheet doesn’t exist, it will be created. If it already exists, the data will be appended to it.

Python Code

import pandas as pd

import spacy

# Load spaCy model

nlp = spacy.load('en_core_web_lg')

# Read keywords from Excel file

df = pd.read_excel(r'C:\keywordcluster.xlsx')

# Extract keywords from DataFrame

keywords = df['Keywords'].tolist()

# Process keywords and obtain document vectors

documents = list(nlp.pipe(keywords))

document_vectors = [doc.vector for doc in documents]

# Group keywords by similarity

groups = {}

for i, keyword in enumerate(keywords):

cluster_found = False

for cluster, cluster_keywords in groups.items():

for key in cluster_keywords:

similarity = nlp(keyword).similarity(nlp(key))

if similarity > 0.7:

groups[cluster].append(keyword)

cluster_found = True

break

if cluster_found:

break

if not cluster_found:

groups[i] = [keyword]

# Identify the pillar page keyword for each cluster

pillar_keywords = {}

for cluster, cluster_keywords in groups.items():

# Calculate the centrality of each keyword in the cluster

keyword_centrality = {}

for keyword in cluster_keywords:

centrality = sum([nlp(keyword).similarity(nlp(other_keyword)) for other_keyword in cluster_keywords])

keyword_centrality[keyword] = centrality

pillar_keyword = max(keyword_centrality, key=keyword_centrality.get)

pillar_keywords[cluster] = pillar_keyword

# Print the clusters along with pillar page keywords

for cluster, cluster_keywords in groups.items():

pillar_keyword = pillar_keywords.get(cluster, "No pillar keyword found")

print(f"Cluster {cluster}: {cluster_keywords} (Pillar Page: {pillar_keyword})")

# Create a new DataFrame for the clusters and pillar page keywords

cluster_df = pd.DataFrame({'Cluster': [f'Cluster {cluster}' for cluster in groups.keys()],

'Keywords': [', '.join(keywords) for keywords in groups.values()],

'Pillar Page': pillar_keywords})

# Write the DataFrame to a new sheet in the same Excel file

with pd.ExcelWriter(r'C:\keywordcluster.xlsx', mode='a', engine='openpyxl') as writer:

cluster_df.to_excel(writer, sheet_name='spaCy', index=False)

Google Colab Code Link

Keyword Clustering Python code for Bert

Pre-requisites

- Pandas: The code uses the

pandaslibrary for reading and manipulating data. You can install it by runningpip install pandasin your command line or terminal. - Sentence Transformers: The code uses the

sentence_transformerslibrary for encoding keywords and calculating similarity. You can install it by runningpip install sentence-transformersin your command line or terminal. - OpenPyXL: The code uses the

openpyxllibrary for writing data to an Excel file. You can install it by runningpip install openpyxlin your command line or terminal. - Scikit-learn : The code utilizes the scikit-learn library for calculating cosine similarity. To install scikit-learn, you can run “pip install scikit-learn” in your command line

- Excel File: The code assumes that there is an Excel file named “keywordcluster.xlsx” located at the path

'C:Users'. Make sure you have the Excel file at the specified location or modify the file path accordingly. - Excel File Structure: The code assumes that the Excel file contains a sheet named ‘Bert’ where the data will be written. If the sheet doesn’t exist, it will be created. If it already exists, the data will be appended to it.

Python Code

import pandas as pd

from sentence_transformers import SentenceTransformer

from sklearn.metrics.pairwise import cosine_similarity

# Load BERT model

model = SentenceTransformer('bert-base-nli-mean-tokens')

# Read keywords from Excel file

df = pd.read_excel(r'C:\keywordcluster.xlsx')

# Extract keywords from DataFrame

keywords = df['Keywords'].tolist()

# Process keywords and obtain document vectors

documents = list(nlp.pipe(keywords))

document_vectors = [doc.vector for doc in documents]

# Group keywords by similarity

groups = {}

for i, keyword in enumerate(keywords):

cluster_found = False

for cluster, cluster_keywords in groups.items():

for key in cluster_keywords:

similarity = nlp(keyword).similarity(nlp(key))

if similarity > 0.7:

groups[cluster].append(keyword)

cluster_found = True

break

if cluster_found:

break

if not cluster_found:

groups[i] = [keyword]

# Identify the pillar page keyword for each cluster

pillar_keywords = {}

for cluster, cluster_keywords in groups.items():

# Calculate the centrality of each keyword in the cluster

keyword_centrality = {}

for keyword in cluster_keywords:

centrality = sum([nlp(keyword).similarity(nlp(other_keyword)) for other_keyword in cluster_keywords])

keyword_centrality[keyword] = centrality

pillar_keyword = max(keyword_centrality, key=keyword_centrality.get)

pillar_keywords[cluster] = pillar_keyword

# Print the clusters along with pillar page keywords

for cluster, cluster_keywords in groups.items():

pillar_keyword = pillar_keywords.get(cluster, "No pillar keyword found")

print(f"Cluster {cluster}: {cluster_keywords} (Pillar Page: {pillar_keyword})")

# Create a new DataFrame for the clusters and pillar page keywords

cluster_df = pd.DataFrame({'Cluster': [f'Cluster {cluster}' for cluster in groups.keys()],

'Keywords': [', '.join(keywords) for keywords in groups.values()],

'Pillar Page': pillar_keywords})

# Write the DataFrame to a new sheet in the same Excel file

with pd.ExcelWriter(r'C:\keywordcluster.xlsx', mode='a', engine='openpyxl') as writer:

cluster_df.to_excel(writer, sheet_name='BERT', index=False)

Google Colab Code Link

Keyword Clusterng Python code for RoBERTa

Pre-requisites

- Pandas: The code uses the

pandaslibrary for reading and manipulating data. You can install it by runningpip install pandasin your command line or terminal. - Sentence Transformers: The code uses the

sentence_transformerslibrary for encoding keywords and calculating similarity. You can install it by runningpip install sentence-transformersin your command line or terminal. - OpenPyXL: The code uses the

openpyxllibrary for writing data to an Excel file. You can install it by runningpip install openpyxlin your command line or terminal. - Scikit-learn : The code utilizes the scikit-learn library for calculating cosine similarity. To install scikit-learn, you can run “pip install scikit-learn” in your command line

- Excel File: The code assumes that there is an Excel file named “keywordcluster.xlsx” located at the path

'C:Users'. Make sure you have the Excel file at the specified location or modify the file path accordingly. - Excel File Structure: The code assumes that the Excel file contains a sheet named ‘Roberta’ where the data will be written. If the sheet doesn’t exist, it will be created. If it already exists, the data will be appended to it.

Python Code

import pandas as pd

from sentence_transformers import SentenceTransformer

from sklearn.metrics.pairwise import cosine_similarity

# Load RoBERTa model

model = SentenceTransformer('roberta-base-nli-mean-tokens')

# Read keywords from Excel file

df = pd.read_excel(r'C:\keywordcluster.xlsx')

# Extract keywords from DataFrame

keywords = df['Keywords'].tolist()

# Obtain sentence embeddings

document_embeddings = model.encode(keywords)

# Group keywords by similarity

groups = {}

for i, keyword in enumerate(keywords):

cluster_found = False

for cluster, cluster_keywords in groups.items():

for key in cluster_keywords:

similarity = cosine_similarity([document_embeddings[i]], [document_embeddings[keywords.index(key)]])[0][0]

if similarity > 0.5:

groups[cluster].append(keyword)

cluster_found = True

break

if cluster_found:

break

if not cluster_found:

groups[i] = [keyword]

# Identify the pillar page keyword for each cluster

pillar_keywords = {}

for cluster, cluster_keywords in groups.items():

# Calculate the centrality of each keyword in the cluster

centrality_scores = []

for keyword in cluster_keywords:

centrality = np.mean([cosine_similarity([document_embeddings[keywords.index(keyword)]], [document_embeddings[keywords.index(other_keyword)]])[0][0] for other_keyword in cluster_keywords])

centrality_scores.append((keyword, centrality))

# Select the keyword with the highest centrality score as the pillar keyword

pillar_keyword = max(centrality_scores, key=lambda x: x[1])[0]

pillar_keywords[cluster] = pillar_keyword

# Print the clusters along with pillar page keywords

for cluster, cluster_keywords in groups.items():

pillar_keyword = pillar_keywords.get(cluster, "No pillar keyword found")

print(f"Cluster {cluster}: {cluster_keywords} (Pillar Page: {pillar_keyword})")

# Create a new DataFrame for the clusters and pillar page keywords

cluster_df = pd.DataFrame({'Cluster': [f'Cluster {cluster}' for cluster in groups.keys()],

'Keywords': [', '.join(keywords) for keywords in groups.values()],

'Pillar Page': pillar_keywords})

# Write the DataFrame to a new sheet in the same Excel file

with pd.ExcelWriter(r'C:\keywordcluster.xlsx', mode='a', engine='openpyxl') as writer:

cluster_df.to_excel(writer, sheet_name='RoBERTa', index=False)

Google Colab Code Link

Hybrid Approaches: Combining Clustering Algorithms

In some cases, combining multiple clustering algorithms can yield superior results. Hybrid approaches leverage the strengths of different algorithms to overcome individual limitations and enhance the overall accuracy of keyword clustering.

Ensemble clustering techniques, for example, involve applying multiple clustering algorithms and combining their results to produce a final clustering solution. This approach reduces the impact of algorithm-specific biases and provides a more robust and comprehensive clustering outcome.

Experimentation and fine-tuning are crucial when implementing hybrid approaches. By comparing and analyzing the results obtained from different algorithms, it becomes possible to identify the best combination that aligns with your specific goals and requirements.

Best Practices for Algorithm Selection and Fine-tuning

To maximize the effectiveness of keyword clustering, it is essential to follow best practices when selecting algorithms and fine-tuning them for optimal results:

- Understand your clustering task: Clearly define the objectives, data characteristics, and performance metrics before selecting an algorithm. Different clustering scenarios may require different approaches.

- Evaluate computational resources and time constraints: Consider the available computing power and time limitations when choosing an algorithm. Some algorithms, such as BERT and RoBERTa, can be computationally demanding and may require sufficient resources for training and inference.

- Optimize hyperparameters: Fine-tune the algorithm’s hyperparameters to achieve the best performance for your specific clustering task. Experimentation and validation using appropriate evaluation metrics are essential to find the optimal configuration.

- Improve interpretability: While advanced algorithms like BERT and RoBERTa offer impressive accuracy, their results can sometimes be challenging to interpret. Consider incorporating techniques like feature importance analysis or visualization methods to enhance the interpretability of clustering outcomes.

Conclusion

SEO keyword clustering is a powerful technique for improving website visibility and organic search rankings. By automating the clustering process with Python, you can gain valuable insights into user search intent and create targeted content that aligns with their needs. If you are looking for an instant tool without having any coding knowledge then you can use this NLP based keyword clustering tool.

- Top AI Marketing Tools in 2026 - December 2, 2025

- Best SEO Content Optimization Tools - November 13, 2025

- People Also Search For (PASF): The Complete 2025 Guide to Smarter SEO Optimization - November 11, 2025